A security #Meltdown, also for embedded systems?

Meltdown and Spectre are considered by many to be the biggest security flaws in the history of computing, both in terms of numbers of affected devices (billions) and time they have been laying dormant (20 years). Whenever security issues like these that affect PCs and mobile devices become public, we take a look at how they might affect Embedded Systems as well. An inconvenient truth in our industry is that software in Embedded Systems does not get updated, to put it mildly, as often as regular desktop PCs. Sometimes that means “never”. That is why even “ancient” attack vectors like the WannaCry and its descendants such as Petya and NotPetya ramsomware can still cause major damage in various systems, even months or years after the underlying security issues have been made public.

Meltdown and Spectre are considered by many to be the biggest security flaws in the history of computing, both in terms of numbers of affected devices (billions) and time they have been laying dormant (20 years). Whenever security issues like these that affect PCs and mobile devices become public, we take a look at how they might affect Embedded Systems as well. An inconvenient truth in our industry is that software in Embedded Systems does not get updated, to put it mildly, as often as regular desktop PCs. Sometimes that means “never”. That is why even “ancient” attack vectors like the WannaCry and its descendants such as Petya and NotPetya ramsomware can still cause major damage in various systems, even months or years after the underlying security issues have been made public.

The core issue behind Meltdown and Spectre is that parts of a memory protection and isolation system are being compromised on a hardware level. Such isolation is meant to ensure that one task or program can not access the memory used by another task or program and potentially spy out sensitive information. The “good news” for most older chips and many embedded microcontroller devices first: They often don’t have a vulnerable memory isolation logic (involving out-of-order or speculative code execution) in the first place. It is actually worse: The memory in most lower-end embedded chips is wide open to all running tasks. While some microcontrollers do provide an MPU (Memory Protection Unit, see ARM Community for an example), it is often limited in terms of number of memory areas, sizes and number of levels/tasks supported. From our experience it is safe to say that a large number of embedded applications doesn’t make use of it at all. And when an MPU is used, then the primary goal is often to protect code against memory-crossing bugs to make it safer against failure, but not attacks. With these types of systems, once a hacker manages to execute some code on an embedded device, this code should be assumed to immediately have access to all resources of the chip, including the memory.

This looks like a devastating assessment from a security standpoint, however, injecting code into an embedded microcontroller is not easy. Many such systems do not use an operating system at all, have no command line or only a very limited user interface without the option to load and start a piece of code. Typically the only way to inject code is through a bootloader or a debug interface, if at all. It is up to the system designers, sometimes the factory programming and the program running on an embedded microcontroller to disable casual access to these functions.

We know that for many designers of embedded systems, the time they can spend on security issues is limited. If you are part of this group, you may use the publicity around Meltdown and Spectre to justify some extra time to review potentially vulnerabilities to attacks that are based on the same principle: to load or inject malicious code that spies out or manipulates data in your embedded system.

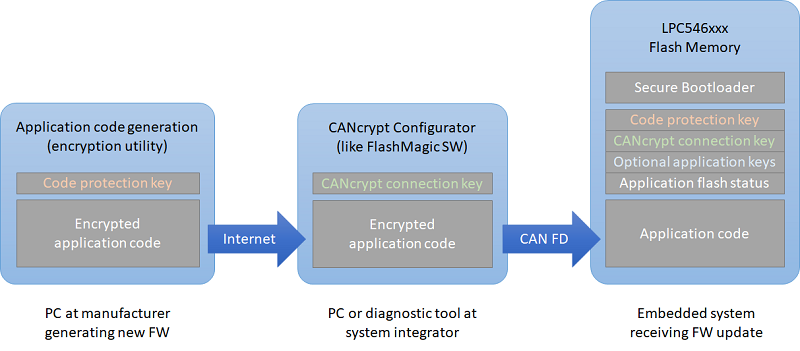

For such a review, first look for all options how code could be injected into your system or altered. Could an attacker make use of any of the provided bootloader mechanisms or the debug interface? If you can’t disable all of these because you need to be able to update “legitimate” code, then authentication is mandatory and encryption during transmission highly recommended. Preferably implement different layers of authentication, for example one to access the interface to update code and another one to protect the code itself. For an example see the secure secondary bootloader we implemented for NXP. Also, review if your microcontroller has a MPU or similar and how you can make best use of it not only to protect the system from buggy code but also from intentional attacks.

Deutsch

Deutsch English

English

Learn about our current product range for embedded systems

Learn about our current product range for embedded systems

Embedded Networking with CAN and CANopen. Your technology guide for implementing CANopen devices.

Embedded Networking with CAN and CANopen. Your technology guide for implementing CANopen devices. Implementing scalable CAN security. Authentication and encryption for higher layer protocols, CAN and CAN-FD

Implementing scalable CAN security. Authentication and encryption for higher layer protocols, CAN and CAN-FD